AI & UX: from strategy to execution

A three-part series on successfully implementing artificial intelligence in user experiences.

These three articles form a guide to designing AI experiences that truly add value. From strategic scoping to practical implementation and critical evaluation, each article builds on the previous one and offers concrete guidance for organisations that want to use AI without falling into common pitfalls.

AI Experience Audit: what works and what doesn’t?

The implementation of artificial intelligence is in a transition phase between hype and maturity. This often leads to chaos, with organisations experimenting and pushing the boundaries of UX. That’s why a thorough audit of both successful and frustrating AI experiences is essential to determine when AI truly adds value to the user experience. Let’s start by looking at AI interfaces that don’t quite work.

What failed AI experiences teach us

Failed AI implementations often share the same underlying issues: unrealistic expectations, poor transparency, or unclear functionality.

1. Poor discoverability: Amazon’s AI bot Rufus caused significant confusion among users. While people were typing in the search bar, AI suggestions appeared underneath the standard autocomplete results. Amazon also placed the bot in a prominent but unusual location in the top navigation, and research showed that users didn’t notice the button at all while shopping. This illustrates that when an AI feature is positioned according to business priorities rather than usability, it risks becoming completely undiscoverable.

2. Lack of guidance: Because many AI products are defined too broadly, users often don’t know how to get started, which raises the barrier to asking a question. An open greeting such as “How can Claude help today?” offers no clear direction. If the system then fails, users can’t tell whether they did something wrong or the model simply can’t handle the task, which leads to frustration and loss of trust.

3. Opt-out friction: Not giving users a choice about whether to use AI is a common UX mistake. Slack rolled out an AI feature as a “quiet opt-in”, which required users to email support to opt out. Failing to provide simple opt-in and opt-out controls harms trust and is a textbook example of poor UX.

Examples of strong AI integration

Seamless integration usually happens when the AI tool solves a clear, specific problem.

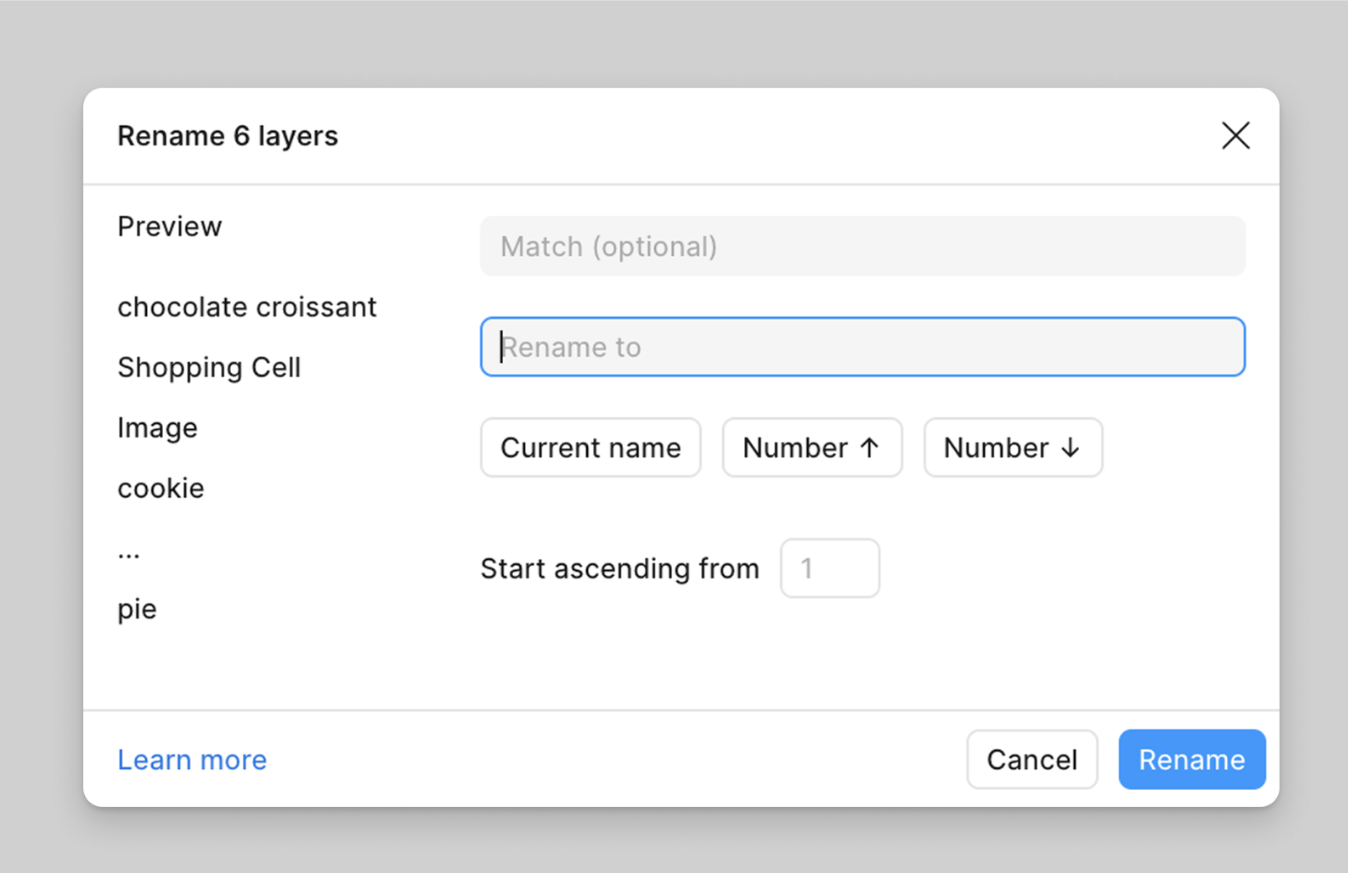

1. Rename Layers: Figma’s Rename Layers feature is an excellent example of a well-implemented AI feature. It tackles a concrete, everyday annoyance for designers: renaming layers. The function is clearly scoped and provides instant value.

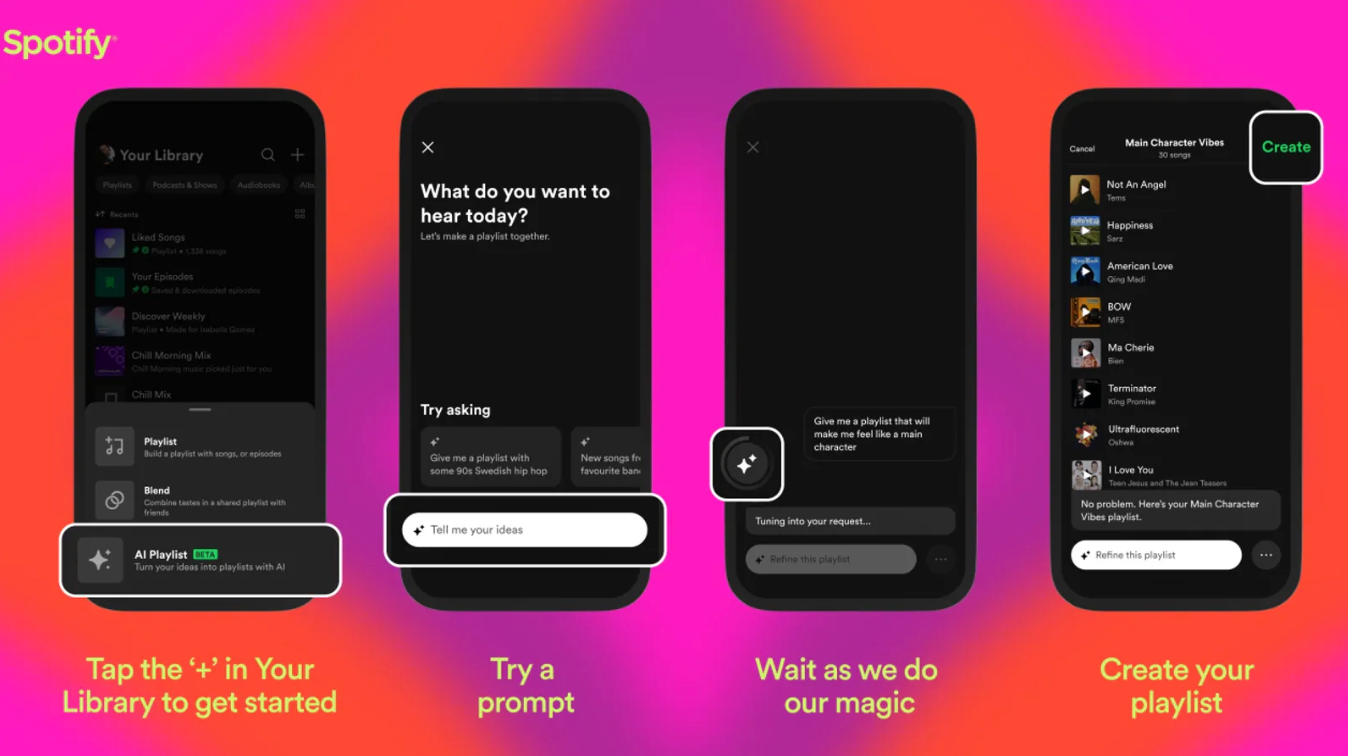

2. AI Playlists: Spotify’s AI-powered playlists deliver immediate results and allow users to give explicit feedback (such as marking a song as something they don’t want recommended again). This gives users a sense of control — essential for building trust in AI-driven suggestions.

3. Transparency through sourcing: Tools like Elicit and NotebookLM show where their information comes from. This source transparency increases user confidence in the output: with citations visible, users can verify the accuracy of the information provided. These tools also help users structure their thinking, which improves their interaction with the AI system.

4. Visible reasoning: Perplexity displays “thinking” or “reasoning” indicators while generating an answer. These signals show that the system is gathering, analysing or evaluating information. For example, it may indicate that it’s consulting additional sources, requires multiple steps, or needs more time for a complex request. This helps users understand what’s happening behind the scenes, improving transparency and perceived control.

How do you measure the success of AI features?

In article 1 we discussed how to properly scope AI, and in article 2 we explored best practices for designing an AI feature. But how do you measure whether that feature is actually achieving its intended goals?

1. Measuring user trust: Trust is crucial, yet difficult to quantify. A user’s mental model plays a major role here: the internal understanding of how a system works, what it can do and what it cannot do. This mental model strongly influences how much trust a user places in the output.

User trust can be assessed and strengthened through:

- Qualitative research: User interviews, observations and competitive research offer insight into how users think, what assumptions they hold about AI and where their doubts lie. This helps clarify expectations and mental models early in the process.

- Wizard of Oz testing: Users interact with a prototype that appears to be powered by AI, while a human provides the responses behind the scenes. This allows teams to quickly test how users respond to conceptual AI features and how much trust they have in the output — without needing a technically functional model yet.

- Confidence indicators: Showing confidence scores or uncertainty indicators creates more transparent interfaces. When users can see how certain the model is, they can better judge when to trust the output and when to double-check it — which ultimately strengthens trust in the system.
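To make the confidence-indicator idea concrete, here is a minimal Python sketch that turns a model confidence score into a label an interface could display next to an answer. It assumes the model exposes a score between 0 and 1; the function name `confidence_label` and the thresholds of 0.8 and 0.5 are hypothetical choices for illustration, not values from any particular product, and real thresholds would need to be calibrated against how often answers at each score are actually correct.

```python
def confidence_label(score: float, high: float = 0.8, low: float = 0.5) -> str:
    """Map a model confidence score in [0, 1] to a user-facing label.

    The default thresholds are hypothetical and only serve as an illustration.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score >= high:
        return "High confidence"
    if score >= low:
        return "Medium confidence: consider double-checking"
    # Below the lower threshold, explicitly ask the user to verify.
    return "Low confidence: please verify this answer"


for s in (0.92, 0.65, 0.3):
    print(f"{s:.2f} -> {confidence_label(s)}")
```

Phrasing the lower tiers as an action ("double-check", "verify") tells users what to do with the uncertainty, which supports the judgement about when to trust the output described above.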

2. Performance and failure metrics: To understand how well AI works for users, we need to look beyond basic metrics such as usage or retention. It’s essential to measure how often the AI makes mistakes and how reliable its output is:

- Hallucination rate: How often does the AI produce incorrect or fabricated answers? This includes made-up facts, names or places.

- Accuracy of the output: How often does the AI provide correct and useful information? Errors to track include misinterpretations, incomplete answers and irrelevant output.

- User experience (UX): How do users experience the AI? Do they understand what it is doing, is its feedback clear, and can they follow the process with ease?
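One simple way to turn the hallucination rate and output accuracy into numbers is to have reviewers label a sample of AI responses and compute rates over that sample. The Python sketch below assumes a hypothetical review format in which each response receives exactly one label: `correct`, `hallucination` (made-up facts, names or places), `misinterpretation`, `incomplete` or `irrelevant`. The label set, the `ReviewedResponse` type and the `failure_metrics` function are illustrative assumptions, not a standard evaluation scheme.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical labels a human reviewer assigns to each AI response.
LABELS = {"correct", "hallucination", "misinterpretation", "incomplete", "irrelevant"}


@dataclass
class ReviewedResponse:
    prompt: str
    label: str  # exactly one of LABELS


def failure_metrics(reviews: list[ReviewedResponse]) -> dict[str, float]:
    """Compute hallucination rate, accuracy and per-error rates over a review sample."""
    if not reviews:
        raise ValueError("need at least one reviewed response")
    counts = Counter(r.label for r in reviews)
    unknown = set(counts) - LABELS
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    total = len(reviews)
    metrics = {
        "hallucination_rate": counts["hallucination"] / total,
        "accuracy": counts["correct"] / total,
    }
    # Break down the remaining error types listed under output accuracy.
    for label in ("misinterpretation", "incomplete", "irrelevant"):
        metrics[f"{label}_rate"] = counts[label] / total
    return metrics


sample = [
    ReviewedResponse("Capital of Australia?", "correct"),
    ReviewedResponse("Who wrote this paper?", "hallucination"),
    ReviewedResponse("Summarise the meeting", "incomplete"),
    ReviewedResponse("Find a vegan recipe", "correct"),
]
print(failure_metrics(sample))
```

Because every response gets exactly one label, the rates add up to 1, so a drop in accuracy always shows up as a rise in a specific error type. The UX questions in the last bullet are harder to reduce to a single label and fit better with the qualitative methods described earlier.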

From strategy to execution

The question is no longer whether to use AI in your product, but how to do so responsibly. Hopefully, the examples in this article offer a practical checklist: Is the problem clearly defined? Can users verify the output? Is there room for feedback and control?

Feeling lost? Follow these steps:

- Audit your current AI implementations using the success patterns as a benchmark.

- Test with real users before launching (Wizard of Oz testing is invaluable).

- Measure not just usage, but also trust and failure rates.

Integrating artificial intelligence into UX is an iterative process. Start experimenting, learn from what doesn’t work, and build step by step towards experiences that genuinely help users.